Twitter’s new owner, Elon Musk, is feverishly promoting his “Twitter Files”: selected internal communications from the company, laboriously tweeted out by sympathetic amanuenses. But Musk’s apparent conviction that he has released some partisan kraken is mistaken. Far from exposing conspiracy or systemic abuse, the files are a valuable peek behind the curtain of moderation at scale, hinting at the Sisyphean labors undertaken by every social media platform.

For a decade, companies like Twitter, YouTube, and Facebook have performed an elaborate dance to keep the details of their moderation processes equally out of reach of bad actors, regulators, and the press.

To reveal too much would be to expose the processes to abuse by spammers and scammers (who indeed take advantage of every leaked or published detail), while revealing too little leads to damaging reports and rumors as the companies lose control over the narrative. Meanwhile, they must be ready to justify and document their methods or risk censure and fines from government bodies.

The result is that while everyone knows a little about how exactly these companies inspect, filter, and arrange the content posted on their platforms, it’s just enough to be sure that what we’re seeing is only the tip of the iceberg.

Sometimes there are exposés of the methods we suspected: by-the-hour contractors clicking through violent and sexual imagery, an abhorrent but apparently necessary industry. Sometimes the companies overplay their hands, as with repeated claims that AI is revolutionizing moderation, followed by reports that AI systems built for this purpose are inscrutable and unreliable.

What almost never happens (generally, companies don’t do this unless they’re forced to) is that the actual tools and processes of content moderation at scale are exposed with no filter. And that’s what Musk has done, perhaps to his own peril, but surely to the great interest of anyone who ever wondered what moderators actually do, say, and click as they make decisions that may affect millions.

Pay no attention to the honest, complex conversation behind the curtain

The email chains, Slack conversations, and screenshots (or rather photos of screens) released over the past week provide a glimpse at this important and poorly understood process. What we see is a bit of the raw material, and it is not the partisan illuminati some expected, though it is clear from its highly selective presentation that this is what we are meant to perceive.

Far from it: the people involved are by turns cautious and confident, practical and philosophical, outspoken and accommodating, showing that the choice to limit or ban is not made arbitrarily but according to an evolving consensus among opposing viewpoints.

In the lead-up to the choice to temporarily restrict the Hunter Biden laptop story (probably the most contentious moderation decision of the past few years, second only to banning Trump), there is neither the partisanship nor the conspiracy insinuated by the bombshell packaging of the documents.

Instead, we find serious, thoughtful people attempting to reconcile conflicting and inadequate definitions and policies: What constitutes “hacked” materials? How confident are we in this or that assessment? What is a proportionate response? How should we communicate it, to whom, and when? What are the consequences if we do, and if we don’t, limit it? What precedents do we set or break?

The answers to these questions are not at all obvious, and they are the kind of thing usually hashed out over months of research and discussion, or even in court (legal precedents affect legal language and repercussions). Yet these decisions had to be made fast, before the situation got out of control one way or another. Dissent from within and without (from a U.S. Representative, no less; ironically, one doxxed in the thread along with Jack Dorsey, in violation of the selfsame policy) was considered and honestly integrated.

“This is an emerging situation where the facts remain unclear,” said former Trust and Safety chief Yoel Roth. “We’re erring on the side of including a warning and preventing this content from being amplified.”

Some question the decision. Some question the facts as they have been presented. Others say it’s not supported by their reading of the policy. One says they need to make the ad hoc basis and extent of the action very clear, since it will obviously be scrutinized as a partisan one. Deputy General Counsel Jim Baker calls for more information but says caution is warranted. There’s no clear precedent; the facts are at this point absent or unverified; some of the material is plainly nonconsensual nude imagery.

“I believe Twitter itself should curtail what it recommends or puts in trending news, and your policy against QAnon groups is all good,” concedes Rep. Ro Khanna, while also arguing that the action in question is a step too far. “It’s a fine balance.”

Neither the public nor the press has been privy to these conversations, and the truth is we’re as curious, and largely as much in the dark, as our readers. It would be incorrect to call the published materials a complete or even accurate representation of the whole process (they are blatantly, if ineffectively, picked and chosen to fit a narrative), but even such as they are, we are more informed than we were before.

Tools of the trade

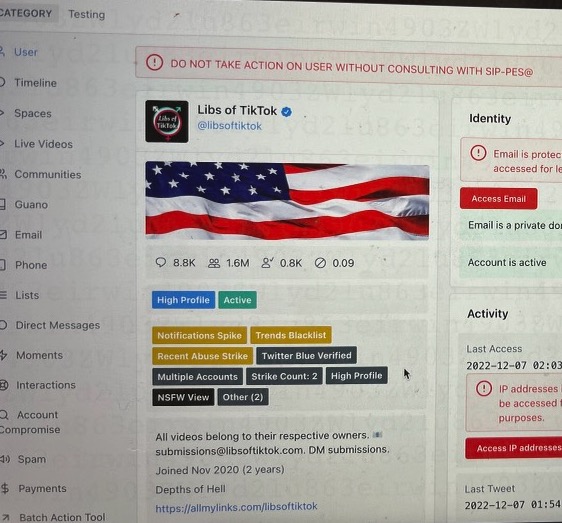

Even more directly revealing was the next thread, which carried screenshots of the actual moderation tooling used by Twitter employees. While the thread disingenuously attempts to equate the use of these tools with shadow banning, the screenshots do not show nefarious activity, nor do they need to in order to be interesting.

Image Credits: Twitter

On the contrary, what is shown is compelling for the very reason that it is so prosaic, so blandly systematic. Here are the various techniques all social media companies have explained again and again that they use, but whereas before we had them couched in PR’s cheery diplomatic cant, now they are presented without comment: “Trends Blacklist,” “High Profile,” “DO NOT TAKE ACTION” and the rest.

Meanwhile, Yoel Roth explains that the actions and policies need to be better aligned, that more research is required, and that plans are underway to improve:

The hypothesis underlying much of what we’ve implemented is that if exposure to, e.g., misinformation directly causes harm, we should use remediations that reduce exposure, and limiting the spread/virality of content is an efficient way to do that… we’re going to need to make a more robust case to get this into our repertoire of policy remediations – especially for other policy domains.

Again, the content belies the context it is presented in: these are hardly the deliberations of a secret liberal cabal lashing out at its ideological enemies with a ban hammer. It’s an enterprise-grade dashboard like you might see for lead tracking, logistics, or accounts, being discussed and iterated upon by sober-minded people working within practical limitations and aiming to satisfy multiple stakeholders.

As it should be: Twitter has, like its fellow social media platforms, been working for years to make the process of moderation efficient and systematic enough to function at scale. Not just so the platform isn’t overrun with bots and spam, but in order to comply with legal frameworks like FTC orders and the GDPR. (The “extensive, unfiltered access” outsiders were given to the pictured tool may well constitute a breach of those frameworks. The relevant authorities told TechCrunch they are “engaging” with Twitter on the matter.)

A handful of employees making arbitrary decisions with no rubric or oversight is no way to moderate effectively or meet such legal requirements; neither (as the recent resignation of several members of Twitter’s Trust & Safety Council testifies) is automation. You need a large network of people cooperating and working according to a standardized system, with clear boundaries and escalation procedures. And that’s certainly what seems to be shown by the screenshots Musk has caused to be published.

What the documents don’t show is any kind of systematic bias, which Musk’s stand-ins insinuate but don’t quite manage to substantiate. But whether or not it fits the narrative they want it to, what is being published is of interest to anyone who thinks these companies ought to be more forthcoming about their policies. That’s a win for transparency, even if Musk’s opaque approach accomplishes it more or less by accident.
